>do you really believe that or does your job depend on you and others believing that?

It's not a question of belief; the job exists because there's a real need to optimize the visuals. The brain has its own API (see, ) and if you don't optimize the "visual code" for it, the interface may work, but in sub-optimal ways that cause discomfort to the user. It's like having a C program that leaks memory: it may perform the expected task, but you wouldn't say it's "right". Margin and padding, alignment, contrast and relative positions define the structure of the interface. In the same way that you use Layout objects behind the curtain to program where every widget is placed, you should use the visual properties of separation, visual weight and rhythm to tell the user which parts are related and what the hierarchy of the elements is. If you fail to convey the structure to the user, their mental model will be wrong and the interface will be hard to use.

There are no glaring mistakes when it comes to Reddit's new design. Nobody is saying that the use of white space is wrong or that having margins and padding is wrong. The issue is when a layout doesn't respect any system and is simply thrown together randomly. If OP's app were to copy the same padding and margins as Reddit's new sidebar, I would have nothing to say against it.

Yes, your app will do fine with minor design issues. However, users might start to notice that your main competitor's app feels better and slowly drift that way. There is a reason that all top brands have such strong user experience and graphic design teams. One or two small issues are fine, but they pile up really quickly. Nobody wants to complete a checkout when the entire page looks like a 1995 website in an iframe.

My personal philosophy is "why do anything if you are not going to do it beautifully?". As a full-stack developer, it's easy for me to see both sides of the coin. It would be very straightforward for me to simply throw components on the screen and call it a day. By the time I've programmed and integrated them, I'm tired and only want to close the task. I have to remind myself of my motto, and it always results in a better experience for everyone involved with the end product. Most of those design decisions can be made in a few minutes if you take the time (and if you have received proper training to spot the issues), and those few minutes can result in a big leap in quality.

Intel stated in 2015 that the pace of advancement had slowed, starting at the 22 nm feature width around 2012 and continuing at 14 nm. Intel is expected to reach the 10 nm node in 2018, a three-year cadence. Brian Krzanich, CEO of Intel, announced, "Our cadence today is closer to two and a half years than two." He cited Moore's 1975 revision as a precedent for the current deceleration, which results from technical challenges and is "a natural part of the history of Moore's law."

More background in a recent article here on 40 years of processor performance:

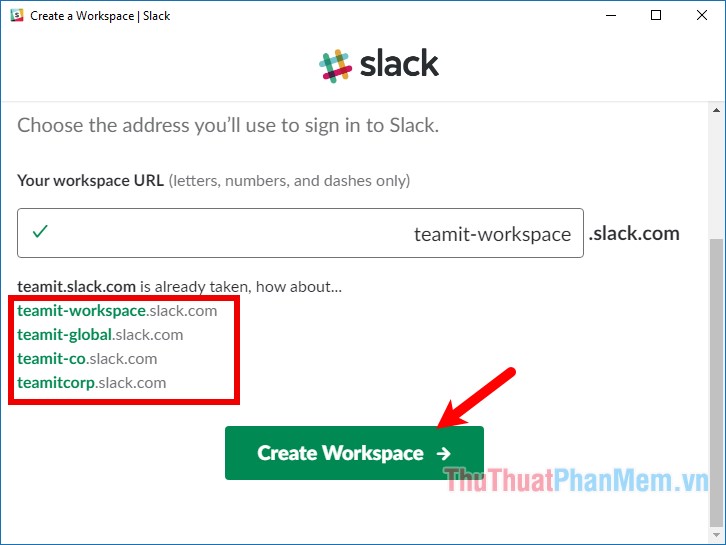

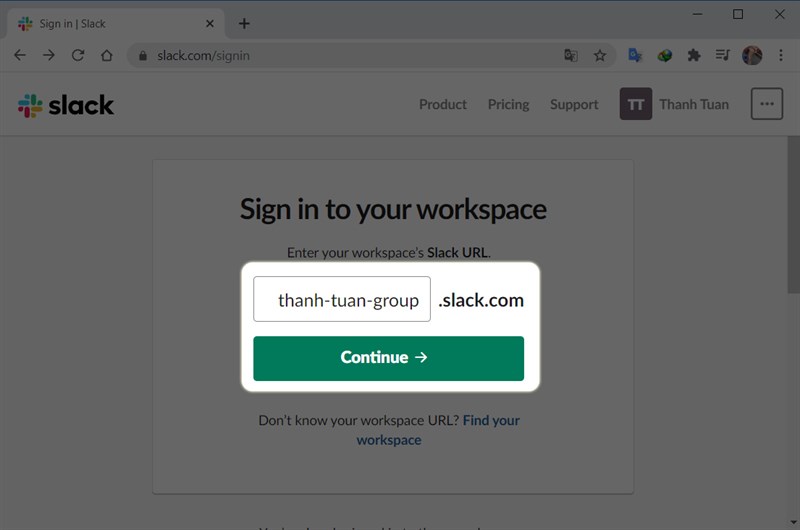

Moore's law is an observation and projection of a historical trend and not a physical or natural law. Although the rate held steady from 1975 until around 2012, it was faster during the first decade. In general, it is not logically sound to extrapolate from the historical growth rate into the indefinite future. For example, the 2010 update to the International Technology Roadmap for Semiconductors predicted that growth would slow around 2013, and in 2015 Gordon Moore foresaw that the rate of progress would reach saturation: "I see Moore's law dying here in the next decade or so."
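To make Intel's cadence figures concrete, here is a rough worked comparison. It rests on a simplifying assumption of my own, not a claim from the sources quoted: transistor density doubles exactly once per cadence period $T$ (in years), so growth over $t$ years is $2^{t/T}$. Real node transitions do not map this cleanly onto doublings, so treat the numbers as illustrative only.

```latex
N(t) = N_0 \cdot 2^{t/T}

\left.\frac{N(10)}{N_0}\right|_{T=2} = 2^{10/2} = 2^{5} = 32, \qquad
\left.\frac{N(10)}{N_0}\right|_{T=2.5} = 2^{10/2.5} = 2^{4} = 16, \qquad
\left.\frac{N(10)}{N_0}\right|_{T=3} = 2^{10/3} \approx 10.1
```

Under this toy model, stretching the cadence from two to two and a half years halves the growth over a decade, and a three-year cadence cuts it to about a third. This shows why extrapolating a fixed historical rate can mislead so quickly.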